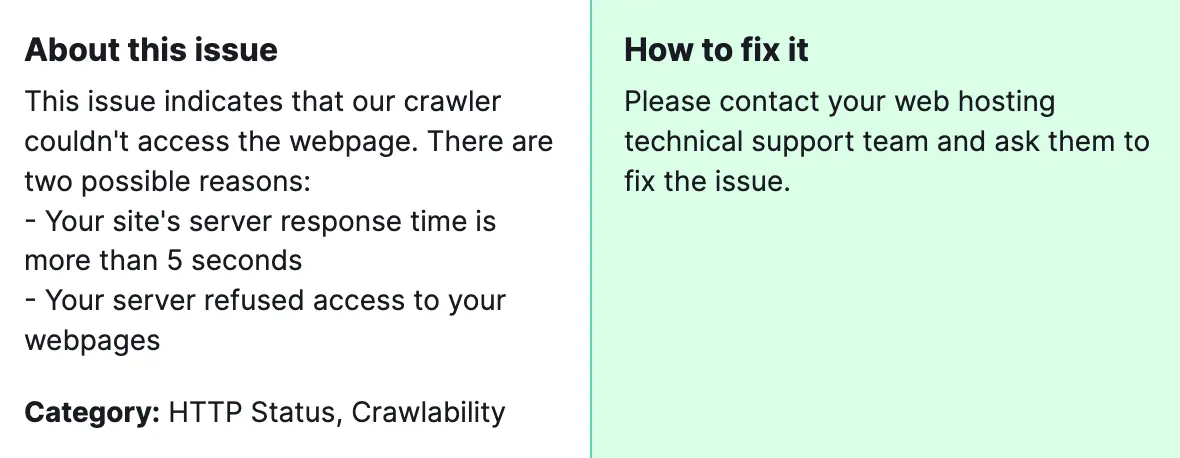

When search engines or tools like Semrush report that some pages couldn’t be crawled, it means their crawler requested those URLs but didn’t get a usable response. Several different problems can cause this, and each of them prevents search engines from accessing and indexing your content.

This matters because if Semrush can’t crawl these pages, it can’t analyze them for SEO issues. That means you might miss important insights that could improve your site’s performance in search results.

Common Reasons for Crawling Issues

This can happen for a few reasons:

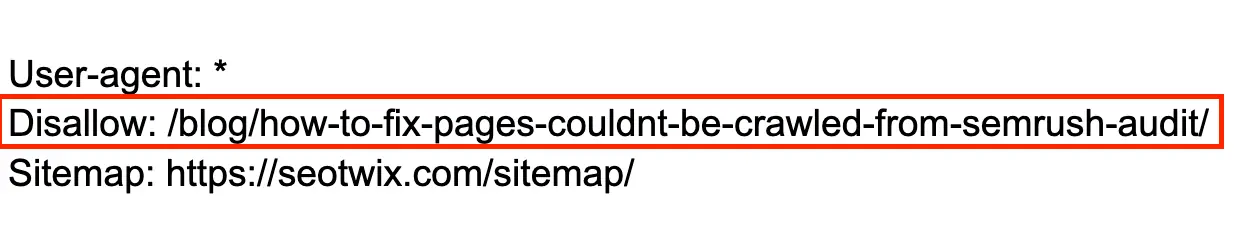

- Blocked by robots.txt: robots.txt is a file that tells crawlers which parts of your site they may or may not visit. If a page is disallowed there, Semrush can’t check it.

- 404 errors: If a page doesn’t exist anymore (and shows a 404 error), Semrush can’t analyze what’s not there.

- Redirects: Sometimes a page doesn’t stay put and sends visitors to another page instead. If a URL passes through too many redirects in a row (a chain) or the redirects point back at each other (a loop), Semrush might get lost.

- Server issues: If your website’s server takes too long to respond, doesn’t respond at all, or returns server errors (5xx status codes), Semrush can’t do its job.

- Heavy pages: If a page is packed with large images or complex scripts, it might take too long to load, and Semrush might give up.

These are the most common causes, but the Semrush audit also highlights two more issues:

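- DNS Resolution Issues: The crawler can’t translate your domain name into an IP address, for example because the DNS server is down, or because the website recently moved to a new IP address and the DNS records haven’t caught up yet.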

- Incorrect URL Formats: Common mistakes include typos, using the wrong domain extension (.com instead of .net), and missing parts of the URL.

How to Fix “Pages couldn’t be crawled” in a Semrush Audit

1) Check Your robots.txt File

Make sure you’re not accidentally telling search engines to keep out: look for Disallow rules that cover pages you want crawled. You can usually find this file by adding /robots.txt to the end of your domain (for example, example.com/robots.txt).
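To test your rules before a crawler runs into them, Python’s standard-library `urllib.robotparser` can evaluate a robots.txt file against a URL. This is a minimal sketch: the sample rules are made up, and `SiteAuditBot` is assumed to be the user-agent token of Semrush’s Site Audit crawler, so confirm the exact name in Semrush’s documentation.

```python
from urllib.robotparser import RobotFileParser

# Assumption: the user-agent token Semrush's Site Audit crawler identifies as.
SEMRUSH_AGENT = "SiteAuditBot"


def is_crawlable(robots_txt: str, url: str, user_agent: str = SEMRUSH_AGENT) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines; to check a live site instead, call
    # parser.set_url("https://example.com/robots.txt") and then parser.read().
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules that block everything under /private/ for all crawlers.
rules = """User-agent: *
Disallow: /private/
"""

print(is_crawlable(rules, "https://example.com/blog/post"))     # True
print(is_crawlable(rules, "https://example.com/private/page"))  # False
```

If a page you expect to be audited comes back `False`, the matching `Disallow` line is what keeps the crawler out.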

2) Monitor Your Server’s Health

Keep an eye on your hosting’s uptime and response times. If your site is often down or slow, it might be time to talk to your hosting provider or consider switching to a better one.
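Your hosting dashboard or an uptime service is the usual way to monitor this, but a quick script shows the idea. The sketch below uses only Python’s standard library to time a single request and label the result; the 2-second “slow” threshold is an arbitrary assumption, not a Semrush limit.

```python
import time
import urllib.error
import urllib.request
from typing import Optional, Tuple


def check_server(url: str, timeout: float = 10.0) -> Tuple[Optional[int], float]:
    """Fetch url once and return (HTTP status or None, seconds elapsed)."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        status = err.code  # urlopen raises HTTPError for 4xx and 5xx answers
    except OSError:
        status = None  # DNS failure, refused connection, or timeout
    return status, time.monotonic() - start


def classify(status: Optional[int], elapsed: float, slow_after: float = 2.0) -> str:
    """Label one check; slow_after (seconds) is an assumed threshold."""
    if status is None:
        return "unreachable"
    if status >= 500:
        return "server error"
    if status >= 400:
        return "client error"
    if elapsed > slow_after:
        return "slow"
    return "ok"


# Usage (needs network access):
#   status, elapsed = check_server("https://example.com/")
#   print(classify(status, elapsed))
```

Running a check like this on a schedule and logging the labels gives you a rough history to show your hosting provider.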

3) Fix Redirect Loops and Broken Links

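Clean up your website’s paths. Point each redirect straight at its final destination instead of through a chain, make sure no redirect leads back to a URL it started from (a loop), and fix or remove internal links that lead to dead ends such as 404 pages. Here’s how to fix redirect chains and loops.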
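If you can export your redirects as a simple mapping from old URL to new URL (from your server config, CMS, or a crawl), a few lines of Python can spot chains and loops and show where each redirect should point instead. The mapping format and function names here are hypothetical, and the 10-hop cap is an arbitrary assumption.

```python
from typing import Dict, List, Tuple


def trace_redirects(redirects: Dict[str, str], start: str,
                    max_hops: int = 10) -> Tuple[List[str], str]:
    """Follow start through the redirect map; return (path, verdict)."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"  # we came back to a URL already visited
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too many hops"
    # One hop is a normal redirect; more than one hop is a chain.
    verdict = "chain" if len(path) - 1 > 1 else "ok"
    return path, verdict


def flatten(redirects: Dict[str, str]) -> Dict[str, str]:
    """Point each redirect straight at its final URL; loops are left out to fix by hand."""
    fixed = {}
    for source in redirects:
        path, verdict = trace_redirects(redirects, source)
        if verdict in ("ok", "chain"):
            fixed[source] = path[-1]
    return fixed


redirects = {"/old": "/older", "/older": "/new", "/a": "/b", "/b": "/a"}
print(trace_redirects(redirects, "/old"))  # (['/old', '/older', '/new'], 'chain')
print(trace_redirects(redirects, "/a"))    # (['/a', '/b', '/a'], 'loop')
print(flatten(redirects))                  # {'/old': '/new', '/older': '/new'}
```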

4) Speed Up Your Site

Optimizing images, minimizing code, and considering a faster hosting service can improve load times.
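Large images are often the easiest win. As a rough sketch using Python’s standard library (the extension list and the 200 KB cut-off are assumptions to adjust for your site), this lists image files in a local copy of your site that are worth compressing or resizing first:

```python
from pathlib import Path
from typing import List, Tuple

# Assumed image types and size limit; tune both for your own site.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}
MAX_KB = 200


def find_heavy_images(root: str, max_kb: int = MAX_KB) -> List[Tuple[str, int]]:
    """Return (path, size in KB) for images under root above max_kb, largest first."""
    heavy = []
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
            size_kb = path.stat().st_size // 1024
            if size_kb > max_kb:
                heavy.append((str(path), size_kb))
    return sorted(heavy, key=lambda item: item[1], reverse=True)


# Usage ("public/" is a hypothetical folder holding your site's files):
#   for path, size_kb in find_heavy_images("public/"):
#       print(size_kb, path)
```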

For DNS Resolution Issues:

1) Check Your DNS Settings

Start by visiting your domain registrar’s website (where you bought your domain name), or wherever your DNS is managed, and review your DNS settings. Make sure the records, typically the A record (IPv4) and AAAA record (IPv6), point to the server where your website is hosted. If you’re unsure, reach out to your hosting provider and ask for help.
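Once you know your host’s IP address, you can confirm what your domain actually resolves to. This is a minimal standard-library sketch; `203.0.113.10` is a placeholder documentation address, not your server, and the answer reflects your own machine’s resolver (including its cache), not necessarily what Semrush sees.

```python
import socket
from typing import Set


def resolved_ips(domain: str) -> Set[str]:
    """Return every IPv4/IPv6 address the local resolver gives for domain."""
    infos = socket.getaddrinfo(domain, 443, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return {info[4][0] for info in infos}


def points_to_host(domain: str, expected_ip: str) -> bool:
    """True if domain currently resolves to expected_ip."""
    try:
        return expected_ip in resolved_ips(domain)
    except socket.gaierror:
        return False  # the name did not resolve at all


# Usage (placeholder domain and IP):
#   print(points_to_host("example.com", "203.0.113.10"))
```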

2) Use a DNS Checker

Free tools online let you check if your DNS settings are propagating correctly across the internet. Use one to see if there are any glaring issues.

3) Wait and Check

Sometimes, DNS changes take a while to spread across the internet. If you’ve just updated your settings, give it up to 48 hours.
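Rather than re-checking by hand, you can poll until the new record shows up. The sketch below looks up addresses with `socket.getaddrinfo` and stops after the 48 hours mentioned above; the 10-minute interval is an arbitrary choice, and `resolve` and `sleep` are parameters only so the loop can be tested without waiting.

```python
import socket
import time
from typing import Callable, Set


def lookup(domain: str) -> Set[str]:
    """Addresses the local resolver returns for domain (empty set if none)."""
    try:
        infos = socket.getaddrinfo(domain, 443, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return set()
    return {info[4][0] for info in infos}


def wait_for_dns(domain: str, expected_ip: str,
                 timeout_s: float = 48 * 3600, interval_s: float = 600,
                 resolve: Callable[[str], Set[str]] = lookup,
                 sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll until domain resolves to expected_ip; give up after timeout_s seconds."""
    deadline = time.monotonic() + timeout_s
    while True:
        if expected_ip in resolve(domain):
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)


# Usage (placeholders): wait_for_dns("example.com", "203.0.113.10")
```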

For Incorrect URL Formats:

1) Review Your Sitemap

Your sitemap helps search engines (and tools like Semrush) navigate your site. Make sure it’s up to date and that every URL in it is correct and leads to an actual page on your website. Here’s a guide on how to fix 4XX pages.
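To check a sitemap systematically, you can pull every `<loc>` URL out of it and request each one. Here is a sketch with Python’s standard library, assuming a regular `urlset` sitemap rather than a sitemap index. It sends HEAD requests to keep things light; some servers answer HEAD with 405, in which case switch to GET. Note that `urlopen` follows redirects, so a redirected URL reports its final status.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET
from typing import Callable, List

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> List[str]:
    """Return every <loc> URL in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
            if loc.text]


def status_of(url: str, timeout: float = 10.0) -> int:
    """HTTP status for url after redirects are followed (0 if the request failed)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code
    except OSError:
        return 0


def broken_sitemap_urls(xml_text: str,
                        get_status: Callable[[str], int] = status_of) -> List[str]:
    """Sitemap URLs that don't answer with a 2xx status."""
    return [url for url in sitemap_urls(xml_text) if not 200 <= get_status(url) < 300]


# Usage (needs network access):
#   with open("sitemap.xml", encoding="utf-8") as f:
#       print(broken_sitemap_urls(f.read()))
```

Anything this reports is worth fixing or removing from the sitemap before you rerun the audit.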

2) Check for Typos

A simple typo in a URL can throw everything off. Double-check your website’s URLs for any misspellings or errors.
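Typos are easier to catch with a script when you have many URLs, for example from your sitemap or a crawl export. The checks below are illustrative, not exhaustive: they flag an unknown scheme, a missing or unexpected domain (such as .com where you expect .net), spaces, and doubled slashes. `expected_host` stands for your own domain.

```python
from typing import List
from urllib.parse import urlsplit


def url_problems(url: str, expected_host: str) -> List[str]:
    """Return a list of likely format mistakes in url (empty if none found)."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        problems.append("missing or unknown scheme")  # e.g. "htps://" or no scheme
    host = parts.hostname or ""
    if host.startswith("www."):
        host = host[len("www."):]
    if not host:
        problems.append("missing domain")
    elif host != expected_host:
        problems.append(f"unexpected domain {parts.hostname!r}")
    if " " in url:
        problems.append("contains a space")
    if "//" in parts.path:
        problems.append("double slash in path")
    return problems


print(url_problems("https://example.com/blog", "example.net"))
# ["unexpected domain 'example.com'"]
```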

3) Ensure Consistency

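Make sure you’re consistent with ‘www’ and ‘non-www’ versions of your URLs, as well as ‘http’ vs. ‘https’. Mixing these can confuse crawlers, so pick one preferred version, use it in your internal links and sitemap, and 301-redirect the other variants to it.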
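A small normalizer makes mixed versions easy to spot in a list of internal links or sitemap entries. This sketch assumes you’ve chosen https and the non-www host as your preferred version (set `use_www=True` if you prefer www); grouping URLs by their normalized form reveals where several variants point at the same page.

```python
from typing import Dict, Iterable, Set
from urllib.parse import urlsplit, urlunsplit


def canonicalize(url: str, use_www: bool = False) -> str:
    """Rewrite url to the preferred form: https, with or without www."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    if host.startswith("www."):
        host = host[len("www."):]
    if use_www:
        host = "www." + host
    if parts.port not in (None, 80, 443):
        host = f"{host}:{parts.port}"  # keep non-default ports
    return urlunsplit(("https", host, parts.path or "/", parts.query, parts.fragment))


def mixed_variants(urls: Iterable[str], use_www: bool = False) -> Dict[str, Set[str]]:
    """Map each preferred URL to the differing variants found for it."""
    groups: Dict[str, Set[str]] = {}
    for url in urls:
        groups.setdefault(canonicalize(url, use_www), set()).add(url)
    return {canon: found for canon, found in groups.items() if len(found) > 1}


links = ["http://example.com/about", "https://www.example.com/about", "https://example.com/blog"]
print(mixed_variants(links))
```

Each group with more than one variant is a place to update links and redirect the extra versions to the preferred one.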

What to Do Next

After taking these steps, rerun the Semrush audit. If you’ve addressed the issues, your site should be more accessible and Semrush can do its job. If you’re still hitting a wall, don’t hesitate to reach out to Semrush support. They’re there to help, and a second pair of eyes can sometimes spot something you missed.

Dealing with website issues can feel like a maze. But remember, every problem has a solution. The “Pages couldn’t be crawled” error in Semrush audits is common, and now you’re equipped to tackle it. Stay patient and thorough, and your website will welcome Semrush audits with open arms in no time.