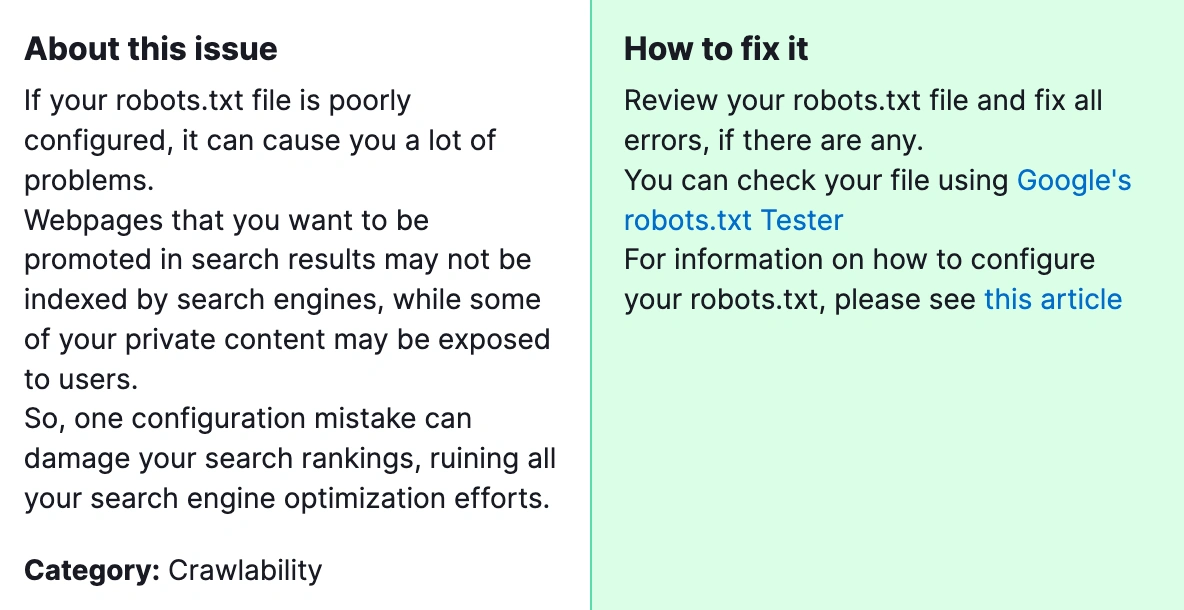

When running a Semrush Audit, encountering a “Robots.txt File has Format Errors” message can be a bit daunting, but it’s an issue that should be addressed promptly. The robots.txt file is a crucial part of your website’s SEO framework. It tells search engine robots which parts of your site they may crawl, which in turn affects what gets indexed.

Why is it important for you to fix this error? When the file has format errors, crawlers may misread or ignore your rules. That can keep important pages from being crawled and shown in search results or, conversely, let crawlers into sections you intended to keep them out of. Fixing these errors ensures that your website is crawled and indexed as you intend, which is fundamental for visibility in search engine results pages (SERPs).

How to Fix “Robots.txt File has Format Errors” Detected by a Semrush Audit?

Here are the steps to fix this error:

1) Locate Your robots.txt File

First, you need to find your robots.txt file. It’s typically located in the root directory of your website and can be accessed by appending /robots.txt to your domain.
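
As a small illustration of "appending /robots.txt to your domain", the sketch below builds the robots.txt address from any page URL on the site. The function name `robots_url` and the example.com address are made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # robots.txt sits at the root of the host, whatever page we started from.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/post?id=7"))
# prints: https://www.example.com/robots.txt
```

Note that each subdomain (for example, a blog on its own hostname) has its own robots.txt file, so the host part of the URL matters.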

2) Identify the Errors

Semrush’s Audit report should give you some clues about what’s wrong with your robots.txt file. Common issues include syntax errors (such as a misspelled directive or a missing colon), invalid or unintended entries, or commands that search engine bots do not understand.

3) Understand the Syntax

Ensure you’re familiar with the syntax rules for a robots.txt file. Each group of rules is composed of two main parts: a User-agent line specifying which bot the group applies to, and one or more Disallow or Allow lines telling the bot which paths it cannot or can access. Every line takes the form of a field name, a colon, and a value.
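
To make the syntax concrete, here is a minimal sketch that feeds a three-line robots.txt to Python's standard-library `urllib.robotparser` and asks whether two URLs may be fetched. The sample rules and the example.com URLs are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# One group: a User-agent line followed by its Disallow/Allow rules.
SAMPLE = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(SAMPLE.splitlines())

# A path under /private/ is blocked for every bot; everything else is allowed.
print(parser.can_fetch("*", "https://www.example.com/private/report.html"))  # False
print(parser.can_fetch("*", "https://www.example.com/blog/"))                # True
```

Python's parser applies the first rule that matches, while Google documents that it applies the most specific matching rule. The sample is ordered so that both approaches give the same answer, but the results are best treated as an approximation of how a given search engine will behave.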

4) Use a Robots.txt Validator

Before making any changes, use a robots.txt validator (many free tools are available online) to pinpoint the exact errors. These tools can help you understand what needs to be fixed by providing detailed error messages.
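
To give a feel for what such a validator looks for, the sketch below flags three common format problems: a line with no colon, an unrecognized field name, and a rule that appears before any User-agent line. It is a simplified illustration, not a replacement for a real validator. The accepted field list is an assumption, and actual crawlers differ in which fields they support.

```python
# Field names this sketch accepts (an assumption; crawler support varies).
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}
# Fields that only make sense inside a User-agent group.
RULE_FIELDS = {"disallow", "allow", "crawl-delay"}


def find_format_errors(text: str) -> list[tuple[int, str]]:
    """Return (line number, message) pairs for simple format problems."""
    errors = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and outer whitespace
        if not line:
            continue
        if ":" not in line:
            errors.append((number, "missing ':' between field and value"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        name = field.lower()
        if name not in KNOWN_FIELDS:
            errors.append((number, f"unknown field {field!r}"))
        elif name == "user-agent":
            if not value:
                errors.append((number, "empty User-agent value"))
            seen_user_agent = True
        elif name in RULE_FIELDS and not seen_user_agent:
            errors.append((number, f"{field} appears before any User-agent line"))
    return errors


BROKEN = """\
Disallow: /tmp/
User-agent: *
Dissallow: /private/
Allow /public/
Sitemap: https://www.example.com/sitemap.xml
"""

for number, message in find_format_errors(BROKEN):
    print(f"line {number}: {message}")
```

For the sample above, the sketch reports problems on lines 1, 3, and 4: a rule outside any group, a misspelled `Disallow`, and a missing colon.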

5) Edit the File Carefully

Open your robots.txt file for editing. Correct any syntax errors, remove invalid or unintended entries, and ensure that you’re not inadvertently blocking important pages from being crawled. If you’re trying to control the crawl on a more granular level, consider using Allow directives or adjusting the robots meta tags or X-Robots-Tag headers for individual pages instead.

6) Test Changes Before Uploading

Test your changes before uploading the updated file to your server. Google Search Console has long provided robots.txt tooling for this (the older robots.txt Tester has since been replaced by a robots.txt report), which helps you see how Googlebot interprets your robots.txt file. Whichever tool you use, confirm that no URLs are blocked that shouldn’t be.
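
A local check can complement the search engine tools. The sketch below, again using Python's standard-library `urllib.robotparser`, runs a draft file against a list of URLs that must stay crawlable and returns the ones the draft would block. The helper name `blocked_urls`, the draft rules, and the URL list are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_text: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs from `urls` that the draft robots.txt blocks for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Draft file that accidentally blocks the whole blog.
DRAFT = """\
User-agent: *
Disallow: /cart/
Disallow: /blog/
"""

MUST_CRAWL = [
    "https://www.example.com/",
    "https://www.example.com/blog/seo-tips",
    "https://www.example.com/products/widget",
]

print(blocked_urls(DRAFT, MUST_CRAWL))
# prints: ['https://www.example.com/blog/seo-tips']
```

An empty list means none of the listed URLs are blocked according to Python's parser. Its rule matching is simpler than Google's, so it is worth confirming the result with the search engine's own tools as well.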

7) Upload the Updated File

Once you’re confident that your robots.txt file is error-free, upload it to the root directory of your website, replacing the old file.

8) Resubmit Your robots.txt to Search Engines

After uploading the corrected file, go back to Google Search Console and any other search engine webmaster tools you use, and submit your updated robots.txt file. This prompts search engines to crawl your site again using the updated directives.

9) Monitor the Results

Finally, keep an eye on your website’s crawl statistics in Google Search Console and other analytics tools. Look for improvements in indexing and ensure that no unintended pages are blocked or allowed.

By addressing the “Robots.txt File has Format Errors” issue promptly and correctly, you ensure that search engines crawl and index your site effectively, which is vital for maintaining and improving your site’s visibility and SEO performance.

If the above solutions do not solve your problem, please contact our team for further assistance.